Amazon might not have built ChatGPT, but it doesn’t seem likely to suddenly fire and then rehire its CEO either, as OpenAI did this month. As of today, Amazon does have its own AI helper—a new chatbot for Amazon Web Services named, of all things, Q.

Amazon Q is a ChatGPT-style chatbot designed for business users that will be available as part of Amazon’s market-dominating AWS cloud platform. It’s aimed at people who use AWS at work, including coders, IT administrators, and business analysts. In response to typed requests, it will help developers write code, answer administrators’ questions about how to use AWS cloud services, and generate business reports by tapping into QuickSight, a business intelligence platform in AWS.

Amazon’s new chatbot is also integrated into Amazon Connect, a customer service platform, to help agents solve support requests. The company says Amazon Q can be customized to a company by accessing proprietary data, and can be programmed to work differently for different employees. It also has security safeguards designed to please wary IT bosses.

Adam Selipsky, CEO of AWS, announced Q at Amazon’s annual cloud conference, re:Invent, along with other AI initiatives, including powerful new Amazon-designed chips for training and deploying AI models like those behind Q and ChatGPT.

Q uses a range of different artificial intelligence models under the hood, including Amazon’s own Titan large language model and LLMs built by Cohere and Anthropic, two well-funded startups competing with OpenAI.

Selipsky points to OpenAI’s near-implosion last week to make the case for companies diversifying their AI providers. “You need not look any further than the events of the past ten days to understand how there will not be one model to rule them all,” he told WIRED ahead of today’s announcement.

When a person sends a query to Q, the bot can answer it using a specific model chosen by a company, or automatically route the question to the best system, although AWS isn’t sharing how. The latter is “more cost-efficient, but also flat out more effective,” Selipsky says.

Since ChatGPT’s launch last November prompted the tech industry to ramp up its investments in AI, Amazon has not led the pack of tech giants trying to compete with OpenAI. Microsoft rapidly added generative AI to its search engine Bing and is now rolling out its Copilot AI assistant throughout its products, thanks to a partnership with OpenAI that saw Microsoft pledge $13 billion in investment into the startup. Google, with a new sense of urgency, has pivoted to deploying generative AI technology across a wide range of its offerings, including technology it had previously held back from public release.

OpenAI’s rapid rise appeared to contribute to a crisis at the company last week, when its nonprofit board fired CEO Sam Altman on November 17. After a chaotic few days in which Altman agreed to join Microsoft and most of OpenAI’s staff threatened to quit, Altman finally negotiated a return. As part of the deal, OpenAI replaced all but one of its directors, did not return Altman to his board seat, and will commission an independent investigation into his conduct.

Selipsky hopes Amazon’s slower-and-steadier approach will appeal to its cloud computing customers, especially those wary of the shortcomings of ChatGPT-style chatbots, such as the way they “hallucinate” information and can capture or repeat private information. With more than 30 percent of the US cloud market—more than any other company—AWS has plenty of potential customers for Q and other AI offerings. Selipsky says there has been a huge amount of inbound demand.

“Many existing generative-AI-powered chat assistants are great for consumers, and you can do really cool things with them, but they lack a lot of the features that you would need to make them truly useful at work,” Selipsky says.

Amazon is making other moves to catch up in generative AI. In September, it said it would introduce ChatGPT-like capabilities to Alexa, its voice assistant. Earlier this month, Reuters reported that Amazon has dedicated a team to building a language model, code-named Olympus, that will be larger than OpenAI’s GPT-4, which powers ChatGPT, perhaps promising better capabilities. That suggests OpenAI will see more intense competition next year. Google is also working on a more powerful AI model, known as Gemini.

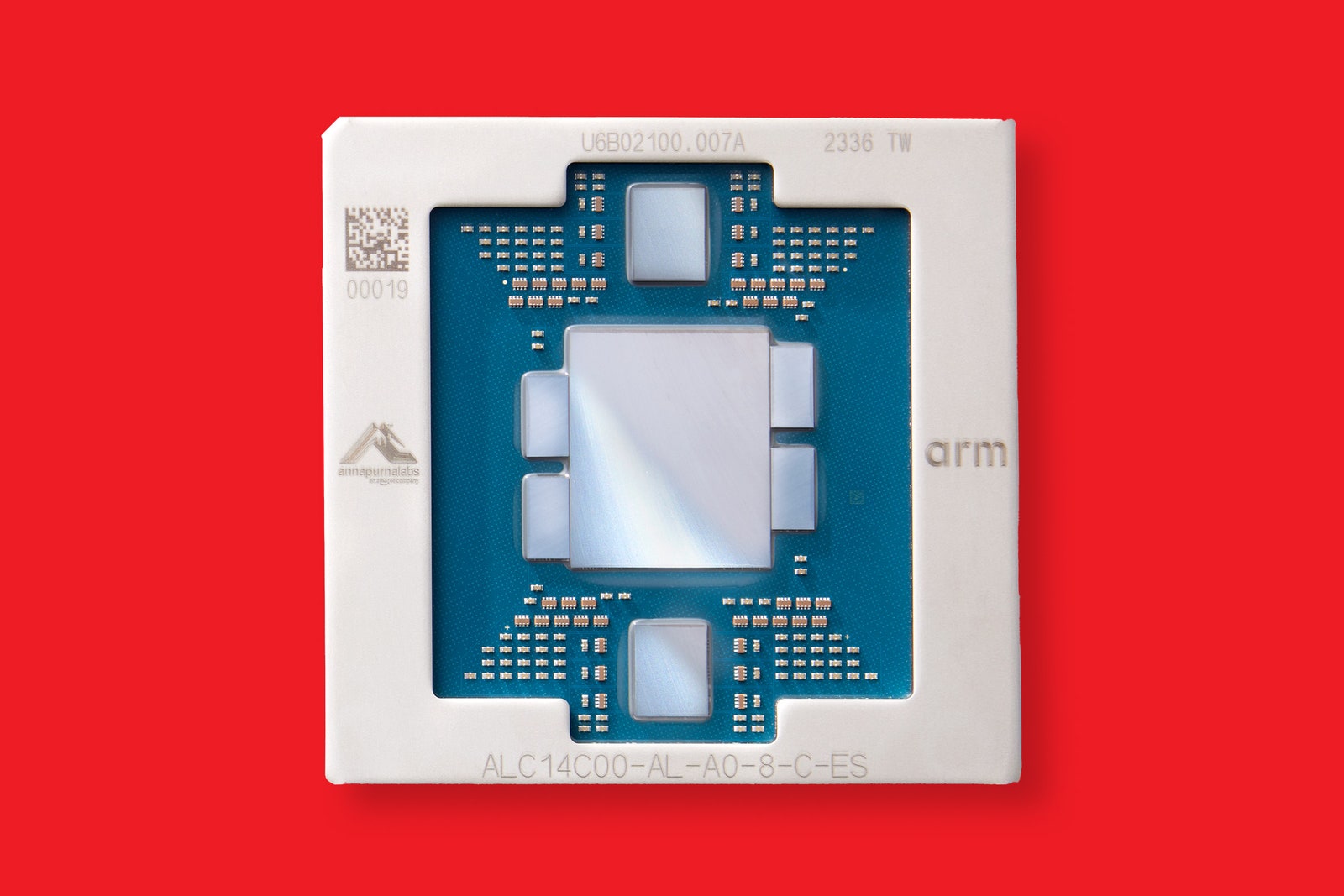

AWS also announced two new AI chips available to its customers today. Access to powerful processors is an essential component for building large language models, and Amazon, Google, and a number of startups are competing with Nvidia, whose GPUs are standard in AI projects.

Amazon says its new fourth generation of silicon for running AI models, Graviton 4, offers 30 percent better performance than the previous model, and that its second generation of chip for training AI models, Trainium 2, is four times as fast and twice as energy-efficient as its predecessor. As part of Amazon’s $4 billion investment in Anthropic, whose Claude chatbot competes with ChatGPT, the startup has agreed to use AWS silicon to train its models.

Source: Wired