The ability to simulate the way matter behaves on the atomic scale is revolutionizing materials science and everything related to it. This approach is producing new materials with exotic properties, resilient alloys for nuclear power and a new understanding of protein folding, to name just a few applications.

These advances are largely the result of ever more powerful computing machines, which aim to make simulations bigger, faster and longer.

That’s the goal, but the reality is more nuanced. More powerful computers have allowed researchers to simulate huge blobs of matter containing trillions of atoms. But even on the world’s most powerful exascale computers, a full month of compute produces just a few microseconds of simulated time.

Even worse, adding more CPUs doesn’t help much. That’s because the time it takes to transmit information between these chips is the limiting factor in these calculations. Adding more chips just makes this bottleneck worse.

Atomic Impact

Now all that is changing thanks to the work of Kylee Santos at Cerebras Systems, a Californian chip maker, along with scientists from the Sandia, Lawrence Livermore and Los Alamos National Laboratories. This group has found a way to speed up atomic scale simulations by two orders of magnitude with results that could have a transformational impact on materials science.

The time delays introduced by interconnects between chips are a well-known issue in the largest-scale computations, where tens of thousands of chips have to be wired together.

These chips generally have many cores, each capable of computing independently of the others. Indeed, high-end desktop CPUs routinely have dozens of cores. The big advantage is that because the cores are all carved on the same slab of silicon, they can swap data rapidly.

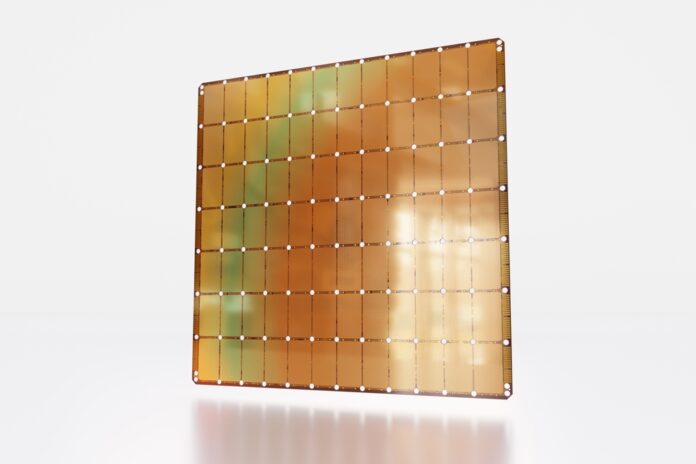

Cerebras was founded to eliminate a curious artifact of chip manufacture. Silicon chips begin life as circular wafers of pure silicon, each about the size of a large dinner plate. These wafers host dozens or hundreds of chips, depending on their size. At the end of the fabrication process, the wafers are broken up into individual chips, which are then sold separately.

But in supercomputers, the chips must be connected back together again so that they can swap data. Cerebras’s big idea is to turn an entire silicon wafer into a single giant chip, which the company calls a “wafer-scale engine.” The big advantage is that inside these wafers, the cores can all communicate at speed.

The result is an extraordinary chip. The company’s Wafer-Scale Engine-2 (WSE-2) is a single chip that houses 850,000 cores, with 40 GB of on-chip memory and a memory bandwidth of 20 petabytes per second. All this draws 23 kilowatts of power, and that is for a single chip!

It is this machine that Santos and colleagues have put through its paces to simulate the behavior of matter. The team use each core to simulate a single atom in a slab of material that contains over 800,000 of them. That’s equivalent to a sliver about 60 x 60 x 2 nanometers in size.

Grain Boundary

A key problem in materials science is understanding how one crystal of metal interacts with the crystal next to it when they are oriented in different directions. These so-called grain boundaries are complex because, although the atoms are perfectly arranged within the crystals, at the boundaries they have more complex arrangements that change over time.

The simulations need to account for atomic vibrations that occur on the scale of femtoseconds while simulating physical and chemical changes, such as changes in an atom’s position relative to its neighbors, that emerge on the scale of microseconds. That’s a difference in scale of nine orders of magnitude. That’s why achieving longer simulation times is so important.
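To put that gap in numbers, here is a quick back-of-the-envelope sketch. It assumes a timestep of one femtosecond, an illustrative value in line with the vibration timescale mentioned above rather than a figure taken from the paper; the variable names are likewise just for illustration.

```python
# Back-of-the-envelope: how many timesteps does one simulated microsecond take?
# Assumption (not from the paper): one timestep covers 1 femtosecond.
FEMTOSECONDS_PER_MICROSECOND = 10**9  # 1e-6 s divided by 1e-15 s

timestep_fs = 1  # assumed timestep length, in femtoseconds
steps_per_microsecond = FEMTOSECONDS_PER_MICROSECOND // timestep_fs

print(f"{steps_per_microsecond:,} timesteps per simulated microsecond")
```

Each timestep depends on the one before it, so under this assumption roughly a billion steps have to be computed one after another for every simulated microsecond. That is why the rate of timesteps per second matters so much.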

Now Santos and co have demonstrated the speedup for copper, tungsten and tantalum, and further advances look eminently possible. “By dedicating a processor core for each simulated atom, we demonstrate a 179-fold improvement in timesteps per second,” say Santos and co. That makes it possible to achieve in a single day the equivalent of six months of runtime on a conventional exascale computer.

Santos and co say this should bring huge dividends when it is applied to open problems in materials science. “Reducing every year of runtime to two days unlocks currently inaccessible timescales of slow microstructure transformation processes that are critical for understanding material behavior and function,” they say.
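The runtime figures follow directly from the quoted 179-fold speedup, as this short sketch suggests. The only assumptions are a 365-day year and an average month of one-twelfth of a year; the variable names are illustrative.

```python
# Check the runtime claims implied by the quoted 179-fold speedup.
SPEEDUP = 179  # improvement in timesteps per second, as quoted above
DAYS_PER_YEAR = 365  # assumption: ignore leap years
DAYS_PER_MONTH = DAYS_PER_YEAR / 12  # assumption: average month length

# One day on the wafer-scale chip equals this much conventional runtime.
conventional_days = 1 * SPEEDUP
conventional_months = conventional_days / DAYS_PER_MONTH

# One year of conventional runtime shrinks to this many wafer-scale days.
wafer_days_per_year = DAYS_PER_YEAR / SPEEDUP

print(f"1 wafer-scale day ~ {conventional_months:.1f} conventional months")
print(f"1 conventional year ~ {wafer_days_per_year:.1f} wafer-scale days")
```

Both numbers land close to the rounded figures in the text: a little under six months per wafer-scale day, and roughly two days per conventional year.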

“Our work demonstrates that novel wafer-scale computer architectures can achieve a major increase in the maximum simulation rate of complex atomistic systems.”

And more advances are expected soon. The current work used the Cerebras WSE-2 wafer, which the company unveiled in 2021. Earlier this year, it launched the WSE-3, designed specifically for AI applications. Its capabilities will be well worth watching.

Ref: Breaking the Molecular Dynamics Timescale Barrier Using a Wafer-Scale System: arxiv.org/abs/2405.07898

Source: Discover Magazine