Plato’s Theory of Forms addresses the question of how we know that an object, such as a table, is what it is, even though we may never have seen it before and even though it might look entirely different to any table we have ever seen.

Plato’s answer is that a table gets its “tableness” because it partakes of the perfect Form of a table. This Form is an ideal, absolute, timeless entity that is the essence of tableness but that we cannot experience directly.

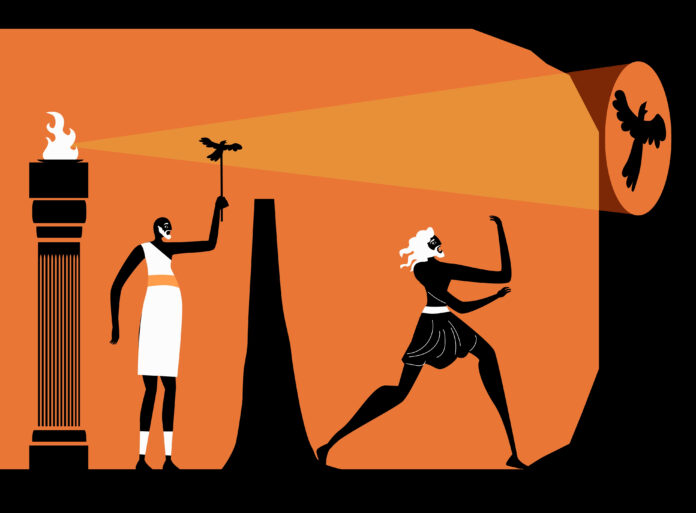

In his Allegory of the Cave, Plato famously explained that the world of Forms is not accessible to human senses and that all we experience are the imperfect shadows of Forms, as if they were cast by firelight onto the walls of a cave.

Plato’s Theory of Forms plays no part in the modern view of the universe. Today we experience the universe directly and indirectly, and come to understand it through the iterative process of science. In this way, humans tend to agree on what makes up the universe and how it behaves.

Curated Data

But artificially intelligent systems, like large language models and machine vision systems, learn about the world in a different way. Everything they know comes from curated sets of words, images and other forms of data.

That raises the question of whether these systems end up with a similar “view” of the world, and whether those views are converging as AI systems become more capable.

Now Minyoung Huh and colleagues at the Massachusetts Institute of Technology in Cambridge have proposed an answer to this question. This group say that as neural networks become more powerful, their representations of the world are becoming more aligned. This appears to be true regardless of the type of model, whether it be a vision system, a language model or some other system.

“This similarity spans across different model architectures, training objectives, and even data modalities,” they say, adding that they think they know why this convergence is happening and where it will end.

That’s where Plato’s Theory of Forms comes in. Huh and co say the reason these systems are converging is that they are all attempting to arrive at a representation of the same reality. This reality generates the data used to train these models, so it shouldn’t be surprising that the models become aligned in how they represent that data.

“We call this converged hypothetical representation the ‘platonic representation’ in reference to Plato’s Allegory of the Cave, and his idea of an ideal reality that underlies our sensations,” they say.

The team go on to test this hypothesis using neural networks that represent the world in terms of vectors and the relationships between them. For example, one way to represent a word is to estimate the probability that it appears next to each of the other words in the vocabulary. This set of probabilities can be represented as a vector that places the word at a specific position within a multi-dimensional vector space.

Using this approach, computer scientists can study the patterns that emerge within this vector space. They have discovered that words with similar functions often form clusters; colors, for example, or positive and negative emotions.
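To make this concrete, here is a minimal count-based sketch in Python, assuming a tiny hypothetical corpus and a one-word context window. It estimates, for each word, the probability of every other word appearing next to it, then uses cosine similarity to show that words with similar roles (here, colors and emotions) end up pointing in the same direction. The corpus, function names and window size are illustrative assumptions; modern language models learn their vectors in far more elaborate ways.

```python
import math
from collections import Counter, defaultdict

# Hypothetical toy corpus: colors and emotions paired with nouns.
corpus = [
    "red apple", "green apple", "red car", "green car",
    "happy dog", "sad dog", "happy child", "sad child",
]

def cooccurrence_vectors(sentences, window=1):
    """Map each word to a vector of P(neighbor | word) over the whole vocabulary."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            start, end = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(start, end):
                if j != i:
                    counts[word][tokens[j]] += 1
    vocab = sorted(counts)
    vectors = {}
    for word in vocab:
        total = sum(counts[word].values())
        # Each entry is the estimated probability that `other` appears next to `word`.
        vectors[word] = [counts[word][other] / total for other in vocab]
    return vocab, vectors

def cosine(u, v):
    """Cosine similarity: 1.0 for vectors pointing the same way, 0.0 for orthogonal ones."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

vocab, vectors = cooccurrence_vectors(corpus)
print(round(cosine(vectors["red"], vectors["green"]), 3))  # 1.0: both colors share neighbors
print(round(cosine(vectors["red"], vectors["happy"]), 3))  # 0.0: no shared neighbors
```

"Red" and "green" share exactly the same neighbors in this corpus, so they cluster together, while "red" and "happy" share none.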

And the relative positions of words within this vector space are largely similar across different languages. So translating a sentence from one language to another is roughly equivalent to mapping the pattern of links between words in one language space onto the same pattern in another language space.
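The mapping idea can be sketched with orthogonal Procrustes alignment, one standard way of finding the rotation that best carries one embedding space onto another. This is a swapped-in illustration rather than a method described in the article. The "English" and "Spanish" embeddings below are hypothetical random vectors, and we assume the word pairs are already known (as with a small seed dictionary), with the second space built as an exact orthogonal transform of the first.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical "English" embeddings: 5 words in a 3-D space (random, for illustration).
english = rng.normal(size=(5, 3))

# Hypothetical "Spanish" embeddings: the same geometry under an unknown orthogonal
# transform (a rotation, possibly with a reflection). Row i is assumed to be the same word.
rotation, _ = np.linalg.qr(rng.normal(size=(3, 3)))
spanish = english @ rotation

def procrustes(source, target):
    """Return the orthogonal matrix W that minimizes ||source @ W - target||_F."""
    u, _, vt = np.linalg.svd(source.T @ target)
    return u @ vt

w = procrustes(english, spanish)
mapped = english @ w

def translate(index):
    """Index of the 'Spanish' vector closest to the mapped 'English' vector."""
    distances = np.linalg.norm(spanish - mapped[index], axis=1)
    return int(np.argmin(distances))

print(np.allclose(mapped, spanish))      # True
print([translate(i) for i in range(5)])  # [0, 1, 2, 3, 4]
```

Restricting the map to be orthogonal preserves distances and angles, which is exactly the assumption that the two languages share the same internal pattern. Real embedding spaces only match approximately, so the recovered map would be a best fit rather than an exact one.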

Neural networks trained on images produce similar vector representations.

That allows Huh and co to measure the distances between similar concepts in these spaces and then compare them. They find that “the ways by which different neural networks represent data are becoming more aligned.” And the alignment improves as the networks become bigger and more powerful.
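One way to compare two such spaces, in the spirit of the mutual nearest-neighbor metric described in the paper, is to ask how many of each item's nearest neighbors are the same in both representations. The sketch below is a simplified illustration, not the authors' code; the `vision` and `language` arrays are hypothetical random stand-ins for two models' features of the same 20 items, and `k=3` is an arbitrary choice. A score of 1.0 means identical neighborhoods, while unrelated representations score near chance (roughly k/(n-1)).

```python
import numpy as np

def knn_indices(features, k):
    """For each row, indices of its k nearest other rows by cosine similarity."""
    normed = features / np.linalg.norm(features, axis=1, keepdims=True)
    sims = normed @ normed.T
    np.fill_diagonal(sims, -np.inf)  # an item is not its own neighbor
    return np.argsort(-sims, axis=1)[:, :k]

def mutual_knn_alignment(feats_a, feats_b, k=3):
    """Mean fraction of each item's k nearest neighbors shared by both representations."""
    nn_a = knn_indices(feats_a, k)
    nn_b = knn_indices(feats_b, k)
    overlaps = [len(set(a) & set(b)) / k for a, b in zip(nn_a, nn_b)]
    return float(np.mean(overlaps))

# Hypothetical stand-ins for two models' features of the same 20 items.
rng = np.random.default_rng(42)
vision = rng.normal(size=(20, 8))
rotation, _ = np.linalg.qr(rng.normal(size=(8, 8)))
language = 2.0 * vision @ rotation    # same geometry, different coordinates
unrelated = rng.normal(size=(20, 8))  # no shared structure

print(mutual_knn_alignment(vision, language))   # high: same neighborhoods
print(mutual_knn_alignment(vision, unrelated))  # low: close to chance
```

Because cosine similarity ignores rotation and uniform scaling, the transformed copy keeps the same neighborhoods even though its raw coordinates differ, which is why a metric like this can compare models whose vector spaces look nothing alike on the surface.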

“This suggests model alignment will increase over time – we might expect that the next generation of bigger, better models will be even more aligned with each other,” say Huh and co.

Human Perception

What’s more, these systems are also aligning with the way the human brain perceives the world. That shouldn’t be surprising, say Huh and co. “Even though the mediums may differ – silicon transistors versus biological neurons – the fundamental problem faced by brains and machines is the same: efficiently extracting and understanding the underlying structure in images, text, sounds, etc.”

The researchers use this way of thinking about neural networks to make some remarkable predictions. They point out, for example, that neural networks work by finding a simple representation that fits a given data set.

This bias towards simplicity suggests that as models get bigger, they should converge towards a smaller solution space. The endpoint in this process, they say, should be a unified model grounded in the statistical properties of the underlying world. “We hypothesize that this convergence is driving toward a shared statistical model of reality, akin to Plato’s concept of an ideal reality,” say Huh and co.

One limitation of this approach is that the work focuses on language and image data sets, when there are many other types of perception that may not be aligned in the same way. It’s not easy to imagine how well touch or smell, for example, can become aligned with a database of images or words.

But the work suggests that these different modalities or perceptions contribute to a better model of the real world. That’s consistent with the so-called “Anna Karenina scenario” based on the famous first line from Tolstoy’s novel: “Happy families are all alike; every unhappy family is unhappy in its own way.”

In this spirit, say Huh and co, all large neural networks are good in a similar way but all small neural networks are bad in different ways. The shocking implication is that although humans and computers currently perceive the world in different ways, one day their perception may be essentially the same. Plato would surely approve!

Ref: The Platonic Representation Hypothesis: https://arxiv.org/abs/2405.07987

Source: Discover Magazine