Last week, an AI Overview search result from Google used one of my WIRED articles in an unexpected way that makes me fearful for the future of journalism.

I was experimenting with AI Overviews, the company’s new generative AI feature designed to answer online queries. I asked it multiple questions about topics I’ve recently covered, so I wasn’t shocked to see my article linked, as a footnote, way at the bottom of the box containing the answer to my query. But I was caught off guard by how much the first paragraph of an AI Overview pulled directly from my writing.

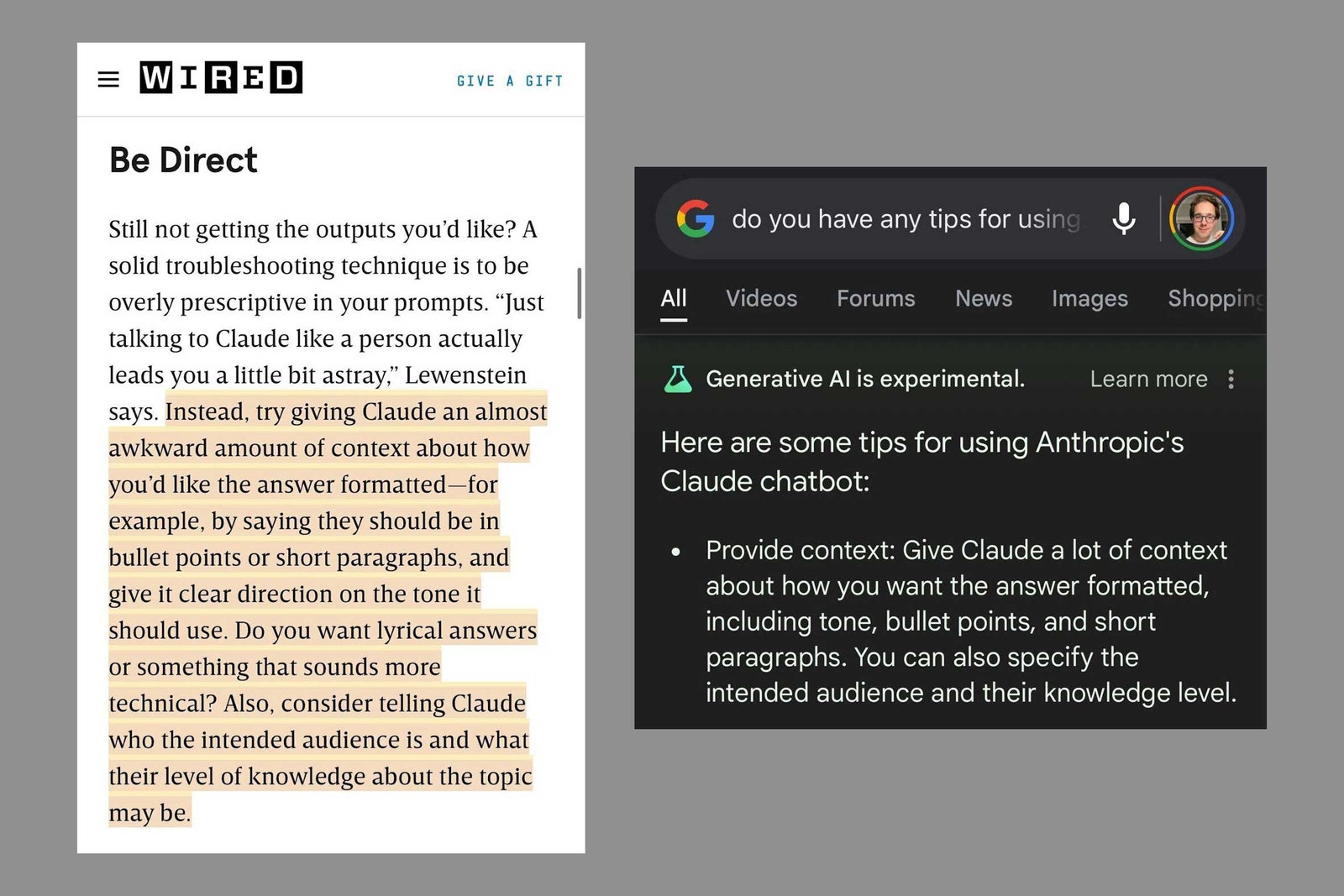

In the screenshot above, the passage on the left is from an interview I conducted with one of Anthropic’s product developers about tips for using the company’s Claude chatbot. The passage on the right is a portion of Google’s AI Overview that answered a question about using Anthropic’s chatbot. Reading the two paragraphs side by side, I’m reminded of a classroom cheater who copied an answer from my homework and barely bothered to change the phrasing.

Without AI Overviews enabled, my article was often the featured snippet highlighted at the top of Google search results, offering a clear link for curious users to click on when they were looking for advice about using the Claude chatbot. During my initial tests of Google’s new search experience, the featured snippet with the article still appeared for relevant queries, but it was pushed beneath the AI Overview answer, which pulled from my reporting and inserted parts of it into a 10-item bulleted list.

In email exchanges and a phone call, a Google spokesperson acknowledged that the AI-generated summaries may use portions of writing directly from web pages, but they defended AI Overviews as conspicuously referencing the original sources. Well, in my case, the first paragraph of the answer is not directly attributed to me. Instead, my original article was one of six footnotes hyperlinked near the bottom of the result. With source links located so far down, it’s hard to imagine any publisher receiving significant traffic in this situation.

“AI Overviews will conceptually match information that appears in top web results, including those linked in the overview,” wrote a Google spokesperson in a statement to WIRED. “This information is not a replacement for web content, but designed to help people get a sense of what’s out there and click to learn more.” Looking at the word choice and overall structure of the AI Overview in question, I disagree with Google’s characterization that the result may be just a “conceptual match” of my writing. It goes further. Also, even if Google developers did not intend for this feature to be a replacement for the original work, AI Overviews provide direct answers to questions in a manner that buries attribution and reduces the incentive for users to click through to the source material.

“We see that links included in AI Overviews get more clicks than if the page had appeared as a traditional web listing for that query,” said the Google spokesperson. No data to support this claim was offered to WIRED, so it’s impossible to independently verify the impact of the AI feature on click-through rates. Also, it’s worth noting that the company compared AI Overview referral traffic to more traditional blue-link traffic from Google, not to articles chosen for a featured snippet, where the rates are likely much higher.

While many AI lawsuits remain unresolved, one legal expert I spoke with who specializes in copyright law was skeptical that I could win any hypothetical litigation. “I think you would not have a strong case for copyright infringement,” says Janet Fries, an attorney at Faegre Drinker Biddle & Reath. “Copyright law, generally, is careful not to get in the way of useful things and helpful things.” Her perspective focused on the type of content in this specific example of original work: she explained that it is much harder to make an infringement claim about instructional or fact-based writing, like my advice column, than about more creative work, like poetry.

I’m definitely not the first person to suggest focusing on your intended audience when writing chatbot prompts, so I agree that the fact-based aspect of my writing does complicate the overall situation. It’s hard for me, though, to imagine a world where Google arrives at that exact paragraph about Claude’s chatbot in its AI Overview results without referencing my work first.

Another copyright expert I spoke with was less certain about what would happen in court for a hypothetical copyright case based on my findings. “I still think we could put this in front of a jury,” says Kristelia García, a professor at Georgetown University Law Center. “And see if that jury would find it to be ‘substantially similar,’ which is the legal standard.”

To be clear, this is all hypothetical. I have no plans to bring litigation against Google. This reporting was done in the interest of informing the public about the impact of artificial intelligence and fostering useful discussion.

Whether or not Google infringed on WIRED’s copyright in this situation, I feel certain that if the company decides to expand the prevalence of AI Overviews, then the feature will dramatically transform digital journalism, likely for the worse. Nilay Patel, cofounder and editor in chief at The Verge, often mentions the concept of “Google Zero,” or the day when publishers wake up and see that their tenuous traffic from the web’s largest referrer has fizzled out. Google’s dominant control over how people search the internet puts the company in a unique position to snuff out traffic, and potentially entire publications, by changing how its service functions.

With the journalism industry already strapped for cash, it’s easy to understand why corporate leaders at publications who are anxious about recent AI developments are rushing to sign licensing deals with major AI companies. The Associated Press, The Atlantic, the parent company of Business Insider and Politico, the Financial Times, Vox Media, the parent company of The Wall Street Journal, and digital publishing giant Dotdash Meredith all have contracts with OpenAI. Nevertheless, rank-and-file workers may not be thrilled about how their writing is sold off in these contracts.

The work of reliable journalists is clearly valuable to these AI-focused companies, even if they don’t pay to license the content from publishers. In a recent blog post, Liz Reid, Google’s head of search, blamed user-generated content, specifically discussion forums, for why AI Overviews told one user to add nontoxic glue to pizza for extra cheese stickiness. The Reddit comment in question may have been from a user named “fucksmith.” By offering up direct, AI-summarized answers to user questions, Google places more onus on itself to get facts straight and highlight quality information.

And if you’re going to copy off someone’s homework, you’d better make sure it’s a straight-A student’s paper—not some troll on Reddit.

Source: Wired