We’re about to enter the Apple Intelligence era, and it promises to dramatically change how we use our Apple devices. Most importantly, adding Apple Intelligence to Siri promises to resolve many frustrating problems with Apple’s “intelligent” assistant. A smarter, more conversational Siri is probably worth the price of admission all on its own.

But there’s a problem.

The new, intelligent Siri will only work (at least for a while) on a select number of Apple devices: iPhone 15 Pro and later, Apple silicon Macs, and M1 or better iPads. Your older devices will not be able to provide you with a smarter Siri. Some of Apple’s products that rely on Siri the most–the Apple TV, HomePods, and Apple Watch–are unlikely to have the hardware to support Apple Intelligence for a long, long time. They’ll all be stuck using the older, dumber Siri.

This means that we’re about to enter an age of Siri fragmentation, where saying that magic activation word may yield dramatically different results depending on what device answers the call. Fortunately, there are some ways that Apple might mitigate things so that it’s not so bad.

Making requests personal

While Apple Intelligence will mean that tomorrow’s Siri will be able to consult a detailed semantic index of your information in order to intelligently process your requests, even today’s dumber Siri uses information on your device to perform tasks. That’s a problem for a device like the HomePod, which isn’t your phone, doesn’t run apps, and has a very limited knowledge of your personal status.

To work around this, Apple created a feature called Personal Requests, which lets you connect your HomePod (and personal voice recognition!) to an iPhone or iPad. When you make a request to Siri on the HomePod that requires data from your iPhone, the HomePod seamlessly processes that request on your more capable device and then gives you the answer.

This sounds like a potential pathway for a workaround to allow Apple devices that can’t support Apple Intelligence to still take advantage of it. Of course, it would increase latency somewhat, but if you’ve got an Apple Intelligence-capable iPhone or iPad nearby, it could potentially make your HomePods much more useful. The same approach could work with an Apple Watch and its paired iPhone. (I can also imagine a future, high-end version of the Apple TV that would be able to execute Apple Intelligence requests and be an in-home hub to handle requests made by lesser devices.)

No, it’s not as ideal as having your device process things itself, but it’s better than the alternative, which is Siri fragmentation.
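To make the hand-off idea concrete, here is a minimal sketch of how a device without Apple Intelligence might pass a request to a more capable one nearby. It is purely illustrative: the `Device` class, the `supports_apple_intelligence` flag, and `choose_handler` are hypothetical names of my own, not anything Apple has described, and the real Personal Requests mechanism is certainly more involved.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    supports_apple_intelligence: bool  # hypothetical capability flag


def choose_handler(receiver: Device, nearby: list[Device]) -> tuple[Device, bool]:
    """Return (device that should process the request, whether it was delegated)."""
    # A capable device simply handles its own request.
    if receiver.supports_apple_intelligence:
        return receiver, False
    # Otherwise hand off to the first capable device in range, if any.
    for candidate in nearby:
        if candidate.supports_apple_intelligence:
            return candidate, True
    # Nothing capable nearby: fall back to the older Siri on the receiver.
    return receiver, False


homepod = Device("HomePod", supports_apple_intelligence=False)
iphone = Device("iPhone 15 Pro", supports_apple_intelligence=True)

handler, delegated = choose_handler(homepod, [iphone])
print(handler.name, delegated)
```

The fallback branch matters: with no capable device in range, the HomePod would still answer with the older Siri rather than fail.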

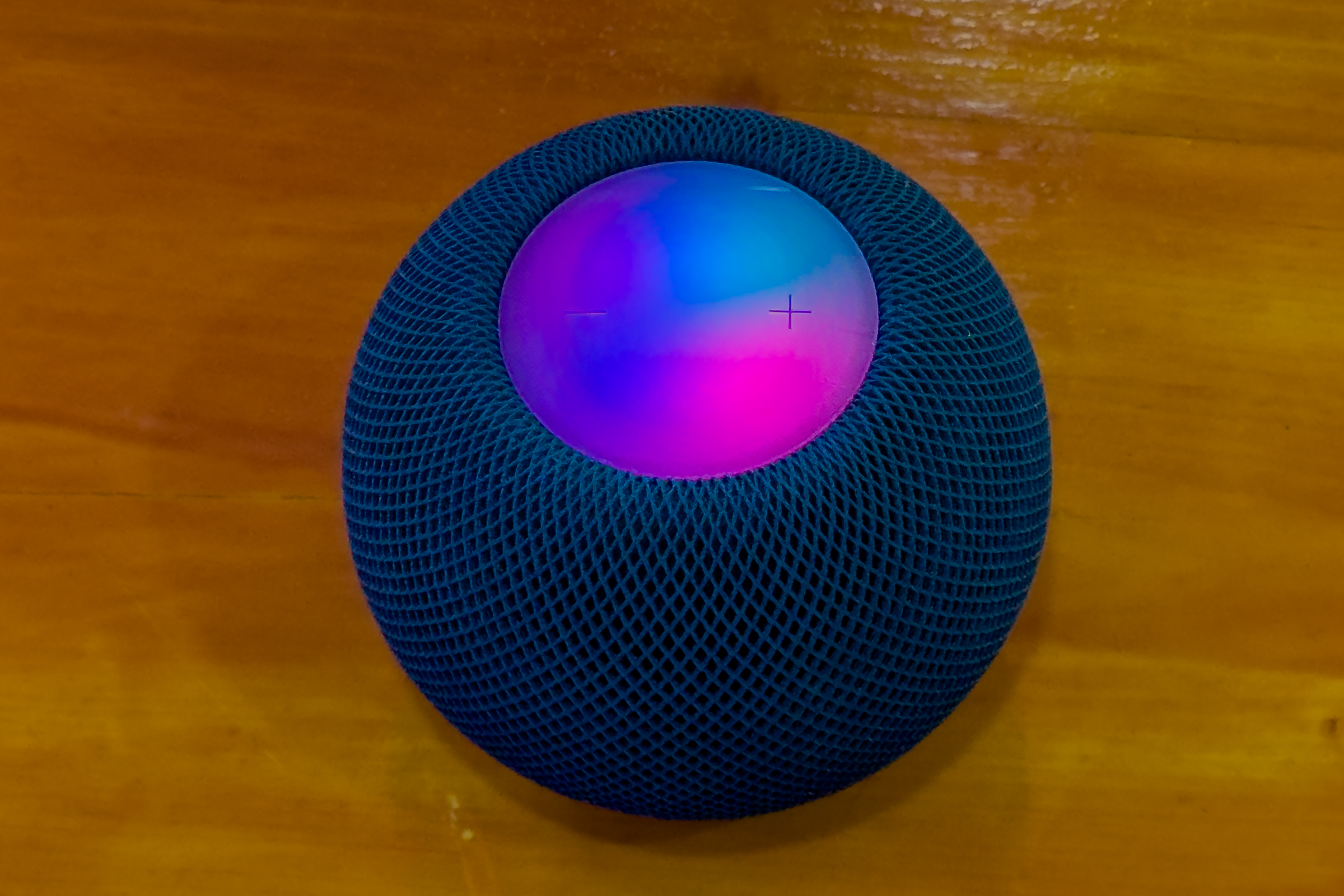

It’s possible that if you have an iPhone compatible with Apple Intelligence, Apple could use Personal Requests so you can use the “smarter” Siri.

Foundry

Private Cloud Compute for more devices

When Apple Intelligence launches, Apple will process some tasks on devices and send other tasks to remote servers it manages, using a cloud system it built called Private Cloud Compute. Apple officials told me that while an AI model will determine which tasks are computed locally and which ones are sent to the cloud, at the start, all devices will follow the same rules for that transfer.

Put another way, an M1 iPad Pro and an M2 Ultra Mac Pro will process requests locally or in the cloud in exactly the same way, even though that Mac Pro is vastly more powerful than that iPad. I’m skeptical that this will remain the case in the long term, but right now, it allows Apple to focus on building one set of client-side and server-side models. It also specifically limits how many jobs are being sent to the brand-new Private Cloud Compute servers.

If you hadn’t noticed, Apple seems to be rushing to get Apple Intelligence out the door as quickly as possible, and building extra models in different places and adding more load to the new servers is not in the launch plan. Fair enough.

But consider a second phase of Apple Intelligence. Apple could potentially tweak its existing model to better balance which devices use the cloud and which use local resources, maybe letting the higher-powered devices with lots of storage space and RAM process a bit more locally. At that point, the company could also potentially let some other devices use Private Cloud Compute for tasks—like, say, Apple Watches and maybe Apple TVs.

The first phase of Private Cloud Compute doesn’t adjust the amount of local processing performed, even if your device offers more power.

Thiago Trevian/Foundry

Given that Apple Intelligence requires parsing personal data in the semantic index, it’s possible that Apple Watch and Apple TV don’t even have the power to compose a proper Apple Intelligence request. However, perhaps future versions could clear that lower bar and become compatible (by a more limited definition of the term).

Selective listening

Another tweak Apple could try is simply to change how its devices listen for commands. If you’ve ever issued a Siri request while in a room full of Apple devices, you might have wondered why they don’t all respond at the same time. Apple devices actually use Bluetooth to talk to each other and decide which device should respond based on whether your voice is clearer or if you’ve recently been using a specific device. If a HomePod is around, it generally takes precedence over other devices.

This is a protocol that Apple could change so that if an Apple Intelligence-capable device is within earshot, it gets precedence over devices with dumber versions of Siri. If Apple Intelligence is really so smart, it might also be smart enough to realize that if it’s in a room with a HomePod and you’re issuing a media play request, you probably want to play that media on the HomePod.
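As a rough illustration of that change, the sketch below ranks the devices that heard a request and lets an Apple Intelligence-capable device win, while still sending media playback to a HomePod. Everything here is an assumption for the sake of the example: the `Candidate` fields, the scoring order, and both function names are hypothetical, and Apple has not published how its arbitration actually weighs these signals.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    voice_clarity: float  # hypothetical 0.0-1.0 score for how clearly it heard you
    recently_used: bool
    is_homepod: bool
    supports_apple_intelligence: bool  # hypothetical capability flag


def pick_responder(candidates, prefer_capable=True):
    """Choose which device answers; tuples compare left to right."""
    def rank(c):
        return (
            prefer_capable and c.supports_apple_intelligence,  # proposed new rule
            c.is_homepod,       # a HomePod generally takes precedence
            c.recently_used,
            c.voice_clarity,
        )
    return max(candidates, key=rank)


def pick_playback_target(responder, candidates, is_media_request):
    """Send media to a HomePod in the room even if another device answered."""
    if is_media_request:
        homepods = [c for c in candidates if c.is_homepod]
        if homepods:
            return max(homepods, key=lambda c: c.voice_clarity)
    return responder


room = [
    Candidate("HomePod", 0.9, False, True, False),
    Candidate("iPhone", 0.6, True, False, True),
]

responder = pick_responder(room)
target = pick_playback_target(responder, room, is_media_request=True)
print(responder.name, target.name)
```

Passing `prefer_capable=False` approximates the behavior described above, where the HomePod answers first; the only difference in the proposed version is the extra first element in the ranking.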

The duck keeps paddling

These all seem like obvious paths forward for Apple so that it can avoid a lot of user frustration when Siri responds in very different ways depending on what device answers the call. But I don’t expect any of them to be offered this year or maybe even until mid- or late next year.

It’s not that Apple hasn’t anticipated that this will be a problem. In fact, I’m almost positive that it has. The people who work on this are smart. They know the ramifications of Apple Intelligence being in a small number of Apple devices, and they know it’ll be years before all the older, incompatible devices cycle out of active use. (The HomePods in my living room just became “vintage”.)

But as I mentioned earlier, Apple Intelligence is not your normal kind of Apple feature roll-out. This is a crash project designed to respond to the rise of large language models over the last year and a half. Apple is moving fast to keep up with the competition. It looks cool and composed in its marketing, but like the proverbial duck, it is paddling furiously beneath the surface.

Apple’s primary goal is to ship a functional set of Apple Intelligence features soon on its key devices. Even there, it’s admitted that it will still be rolling features out into next year, and all of it will only work in US English initially.

I hope the new Siri with Apple Intelligence is really good–so good that it makes the older Siri pale in comparison. Then, I hope that Apple takes some steps, as soon as it can, to minimize the number of times any of us have to talk to the old, dumb one.

Source: Macworld