Chip giant Nvidia reported its third-quarter earnings earlier today, and all ears (and presumably some watch parties) were tuned in to gauge what the company's performance might mean for the near-term future of the artificial intelligence industry as a whole.

The fate of the company’s newest AI chip, Blackwell, was a major focus after production issues caused shipments to be delayed for several months earlier this year. On Sunday, The Information also reported that Blackwell chips were overheating when connected together in Nvidia’s customized server racks, prompting the company to make design changes.

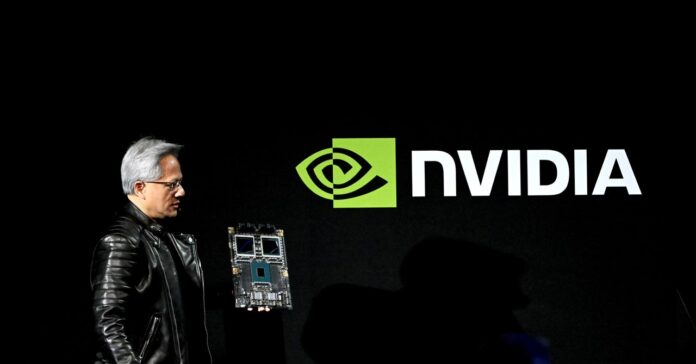

But Nvidia cofounder and CEO Jensen Huang told analysts and investors on the company's earnings call today that Blackwell production is now "in full steam."

“We will deliver this quarter more Blackwells than we had previously estimated,” Huang said. Some of Nvidia’s most important customers, like Microsoft and OpenAI, have already received the new chips, and Blackwell sales could end up generating several billion dollars in revenue for the company next quarter, executives said.

Some industry analysts had already reported that problems with the new chips were resolved. Dylan Patel, chief analyst at the research firm SemiAnalysis, told Business Insider that the Blackwell overheating issues "have been present for months and have largely been addressed."

Patrick Moorhead, founder and chief analyst of Moor Insights & Strategy, told WIRED that his manufacturing contacts were unaware of any major overheating issues. But it’s not unusual, he said, for there to be tension between design, engineering, and production when bringing a new chip design to market.

"It's a debate as old as semiconductors: Is it a design issue? Is it a production issue?" Moorhead said. "People are going to look for any type of smoke that can get in the way of the thesis of [Nvidia's] dramatic growth."

Nvidia generated $35.1 billion in revenue for the quarter, beating estimates of $33.2 billion. Revenue rose 94 percent from the same quarter last year. That's a "small" increase compared with a few recent quarters, when revenue grew as much as 265 percent year over year, but Nvidia isn't going out of business anytime soon. The results indicate that the AI market is still booming as companies pour billions of dollars into advanced chips and other equipment, albeit at a slightly slower pace.

Much of Nvidia's growth this quarter came from its data center business, which generated $30.8 billion in revenue, up 112 percent from a year earlier. The company's gross profit margin was 74.5 percent, essentially flat from a year ago. But analysts expect Nvidia's margins could shrink as the company shifts to producing more Blackwell chips, which cost more to make than their less advanced predecessors.

Nvidia's earnings reports are seen as an important bellwether for the AI industry as a whole. The chip architect's advanced GPUs, which power the processing behind complex neural networks, are what made the current generative AI boom possible. As Silicon Valley giants raced to build new chatbots and image-generation tools over the past few years, Nvidia's revenue exploded, allowing it to surpass Apple as the most valuable public company in the world. Since the launch of ChatGPT in November 2022, Nvidia's stock price has increased nearly tenfold.

Almost every major tech company working on AI, even those building their own processing units, relies heavily on Nvidia GPUs to train its AI models. Meta, for example, has said that it is building its latest AI technology on a cluster of more than 100,000 Nvidia H100s. Smaller AI startups, meanwhile, have been left without enough compute power as Nvidia struggled to keep up with demand.

Blackwell, Nvidia's newest GPU, consists of two pieces of silicon, each roughly the size of its previous chip, Hopper, joined into a single component. The result is a chip that is supposedly four times faster than its predecessor and has more than twice as many transistors.

But the launch of Blackwell hasn’t been smooth sailing. Originally slated to ship in the second quarter, the new chip hit a production snag, reportedly delaying the rollout by a few months. Huang took responsibility for the problem, calling it a “design flaw” that “caused the yield to be low.” Huang told Reuters in August that Nvidia’s longtime chipmaking partner, Taiwan Semiconductor Manufacturing Company Limited, helped Nvidia correct the issue.

Moorhead told WIRED he remains bullish on Nvidia and is confident that the generative AI market will continue to grow for the next 12 to 18 months, at least, despite some recent reports suggesting AI progress is starting to plateau.

“I think the only way shareholders would have a mutiny is if they were concerned about the capital expenditures or the profitability of the hyperscalers,” Moorhead said, referring to big tech companies like Amazon, Google, Microsoft, and Meta that are heavily invested in AI cloud services. “But I think they’re just going to keep buying up Nvidia until that day actually comes.” Enterprise AI is still an area of growth for Nvidia as well, he added.

On today’s earnings call, Nvidia chief financial officer Colette Kress said Nvidia’s enterprise AI tools are in “full throttle,” including an operating platform that lets other businesses build their own copilots and AI agents. Customers include Salesforce, SAP, and ServiceNow, she said.

Huang echoed that point later in the call: "We're starting to see enterprise adoption of agentic AI," he said. "It's really the latest rage."

Source: Wired