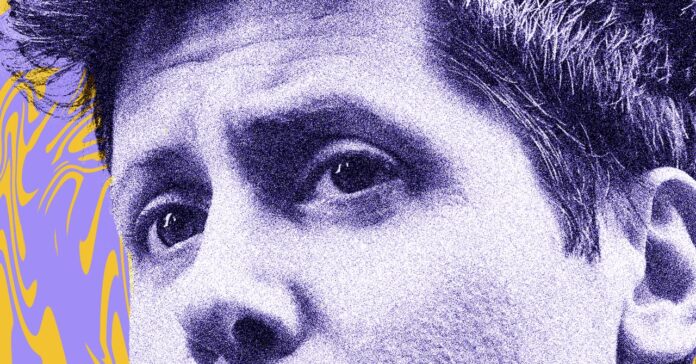

Sam Altman is the king of generative artificial intelligence. But is he the person we should trust to guide our explorations into AI? This week, we do a deep dive on Sam Altman, from his Midwest roots to his early startup days, his time in venture capital, and his rise and fall and rise again at OpenAI.

You can follow Michael Calore on Mastodon at @snackfight, Lauren Goode on Threads @laurengoode, and Zoë Schiffer on Threads @reporterzoe. Write to us at uncannyvalley@wired.com.

How to Listen

You can always listen to this week’s podcast through the audio player on this page, but if you want to subscribe for free to get every episode, here’s how:

If you’re on an iPhone or iPad, open the app called Podcasts, or just tap this link. You can also download an app like Overcast or Pocket Casts and search for “Uncanny Valley.” We’re on Spotify too.

Transcript

Note: This is an automated transcript, which may contain errors.

Sam Altman [archival audio]: We have been a misunderstood and badly mocked org for a long time. When we started and said we were going to work on AGI, people thought we were batshit insane.

Michael Calore: Sam Altman is the CEO and one of the founders of OpenAI, the generative AI company that launched ChatGPT about two years ago, and essentially ushered in a new era of artificial intelligence. This is WIRED’s Uncanny Valley, a show about the people, power, and influence of Silicon Valley. Today on the show, we’re doing a deep dive on Sam Altman, from his Midwest roots to his early startup days, his time as a venture capitalist, and his rise and fall and rise again at OpenAI. We’re going to look at it all while asking, is this the man we should trust to guide our explorations into artificial intelligence, and do we even have a choice? I’m Michael Calore, director of consumer tech and culture here at WIRED.

Lauren Goode: I’m Lauren Goode. I’m a senior writer at WIRED.

Zoë Schiffer: I’m Zoe Schiffer, WIRED’s director of business and industry.

Michael Calore: OK. I want to start today by going back one year into the past, November 2023, to an event that we refer to as the blip.

Lauren Goode: The blip. We don’t just refer to it as the blip. That is actually the internal phrase that is used at OpenAI to describe some of the most chaotic three to four days in that company’s history.

[archival audio]: The company OpenAI, one of the top players in artificial intelligence, thrown into disarray.

[archival audio]: One of the most spectacular corporate fall-outs.

[archival audio]: The news on Wall Street today involves the stunning developments in the world of artificial intelligence.

Zoë Schiffer: It really started on November 17th, this Friday afternoon when Sam Altman, the CEO of the company, gets what he says is the most surprising, shocking, and difficult news of his professional career.

[archival audio]: The shock dismissal of former boss, Sam Altman.

[archival audio]: His firing sent shock waves through Silicon Valley.

Zoë Schiffer: The board at OpenAI, which at the time was a nonprofit, has lost confidence in him, it says. Despite the fact that the company is by all measures doing incredibly well, he’s out. He’s no longer going to lead the company.

Michael Calore: He’s effectively fired from the company that he cofounded.

Zoë Schiffer: Yeah. That immediately sets off a chain reaction of events. His cofounder and president of the company, Greg Brockman, resigns in solidarity. Microsoft CEO Satya Nadella says that Sam Altman is actually going to join Microsoft and lead an advanced AI research team there. Then we see almost the entire employee base at OpenAI sign a letter saying, “Wait, wait, wait. If Sam leaves, we’re leaving, too.”

[archival audio]: Some 500 of these 700-odd employees—

[archival audio]: … threatening to quit over the board’s abrupt firing of OpenAI’s popular CEO, Sam Altman.

Zoë Schiffer: Eventually there’s this tense back-and-forth negotiation between Sam Altman and the board of directors, and eventually the board then installs Mira Murati, the CTO, as the interim CEO. Then shortly after that, Sam is able to reach an agreement with the board and he returns as CEO and the board looks instantly different, with Bret Taylor and Larry Summers joining, Adam D’Angelo staying, and the rest of the board leaving.

Michael Calore: All of this happened over a weekend and the first couple of days of the following week. It ruined a lot of our weekends as technology journalists. I’m sure it ruined a lot of weekends for everybody in the generative AI industry, but for a lot of people who don’t follow this stuff, it was the first time that they heard of Sam Altman, first time maybe they heard about OpenAI. Why was this important?

Zoë Schiffer: Yeah. This was such an interesting moment, because it surprised me. Lauren, I’m curious if it surprised you that this story broke through on such a national scale. People went from not really knowing who Sam Altman was to being shocked and disturbed that he was fired from his own company.

Lauren Goode: I think at this moment, everyone was hearing about generative AI and how it was going to change our lives. Sam was now officially the face of this, and it took this chaos, this Silicon Valley drama to bring that to the forefront, because then in trying to understand what was happening with the mutiny, you got a sense of there are these people in these different camps of AI. Some who believe in artificial general intelligence, that eventually it’s going to totally take over our lives. Some who fall in the camp of accelerationists who believe that AI should just scale as quickly as possible, unfettered AI. Others who are a little bit more cautious in their approach and believe that there should be safety measures and guardrails put around AI. All of these things came into sharp focus through this one very long, chaotic weekend.

Michael Calore: We’re going to talk a lot about Sam on this episode, and I want to make sure that we get a sense of who he is as a person. How do we know him? How do we understand him as a person? What’s his vibe?

Zoë Schiffer: Well, I think Lauren might be the only one who’s actually met him. Is that right?

Lauren Goode: Yeah. I have met him a few times, and my first interaction with Sam goes back about a decade. He’s around 29 years old, and he is the president of Y Combinator, which is this very well-known startup incubator here in Silicon Valley. The idea is that all these startups pitch their ideas and they get a very small amount of seed funding, but they also get a lot of coaching and mentorship. The person who’s running YC is really this very glorified camp counselor for Silicon Valley, and that was Sam at the time. I remember talking to him briefly at one of their YC demo days in Mountain View. He exuded a lot of energy. He’s clearly a smart guy. He comes across as friendly and open. People who know him really well will say he’s really one of the most ambitious people who they know. But at first glance, you wouldn’t necessarily think that he’s the person, fast-forward 10 years later, meeting with prime ministers and heads of state around the world to talk to them about his grand vision of artificial intelligence, and someone who’s really positioning himself to be this power monger in artificial intelligence.

Zoë Schiffer: Yeah. I think one of the interesting things about Sam is just that he seems like such an enigma. People have a really hard time, myself included, putting a finger on what is he all about. Should we trust this guy? I think there are other CEOs and executives in the Valley who have such brash personas, Elon Musk, Marc Andreessen, that you kind of instantly feel one way or another about them. You either really like them or you’re really turned off by them, and Sam is somewhere in the middle. He seems a little more quiet, a little more thoughtful, a little more nerdy. But there’s this sense of, like Lauren said, an appetite for power that makes you pause and think, “Wait. What is this guy all about? What’s in it for him?”

Lauren Goode: Right. He also wears a lot of Henley shirts. Now, I know this is not a fashion show. People who listen to our very first episode—

Zoë Schiffer: But it’s not not.

Lauren Goode: … might be like, “Are they just going to talk about hoodies every episode?” But he wears a lot of Henleys and jeans and nice sneakers, except for when he’s meeting with heads of state, where he suits up appropriately.

Zoë Schiffer: Sam, we have notes on the outfits if you want them, so call us.

Michael Calore: Well, Zoe, to your point, Paul Graham, who was at one time the head of Y Combinator, the incubator that Lauren was just talking about, described Sam as extremely good at becoming powerful. It seems like he’s somebody who can read the situation and read the room and figure out where to go next before other people can figure it out. A lot of people point to parallels between Sam Altman and Steve Jobs. I feel like Steve Jobs was somebody who had a vision for the future and was able to communicate why it was important, and he had a consumer product that got people very, very excited. Sam Altman is also somebody who has a vision for the future, is able to communicate why it’s important to the rest of us, and has a product that everybody is excited about in ChatGPT. I think that’s where the parallel ends.

Lauren Goode: I think just the question of whether Sam Altman is the Steve Jobs of this era is a really good one to ask. You’re right in that they were both effectively the salespeople for products that other people made. They’re in a sense marketers. Jobs marketed the smartphone, which literally changed the world. Sam has helped productize AI, this new form of generative AI through ChatGPT. That’s a similarity. They’re both or were both extremely ambitious, enigmatic, as Zoe said, reportedly impatient. They have some employees who would follow them to the end of the earth and others who have gone running scared. They’ve both been ousted from companies they were running and then returned, although Sam’s absence was infinitely shorter than Steve Jobs’ absence from Apple. I think there are some key differences though. One is that we have the benefit of hindsight with Jobs. We know what he accomplished, and we have yet to see if Altman is really going to be a defining character of AI for the next 10 or 20 or 30 years. The second thing is that while Jobs had some very real personality quirks and complexes by all accounts, I tend to see him as being put into this messianic position by his acolytes. Whereas Sam seems to really, really want to elevate himself to that position, and there are some people who are still really skeptical about that.

Zoë Schiffer: That’s really interesting. Just to double-click, as the tech people say, on the salesperson analogy, that can sound a little reductive as well, but I actually think it’s a really important point, because large language models have existed. AI has existed to some extent. But if people, regular users don’t know how to use those products or interact with them, do they really break out and change the world in the way that people like Sam Altman think they will? I would say no. His role in launching ChatGPT, which is I think by all accounts not an incredibly useful tool, but points to future uses of AI and what they could be and how it could interact with people’s everyday lives. That is a really important change and an important impact that he’s had.

Michael Calore: Yeah. Lauren touched on this point, too. We don’t know what the impact is going to be. We don’t have the benefit of hindsight here, and we don’t know whether these promises about how artificial intelligence is going to transform all of our lives, how those are going to play out. There are a lot of people who are very skeptical about AI, particularly artists and creative people, but also people who work in surveillance and security and the military have a fear and a healthy skepticism around AI. We’re looking at this figurehead as the person who’s going to lead us into the future where this becomes the defining technology of that future, so the question becomes, can we trust this person?

Lauren Goode: I feel like Sam’s feedback to that would be, “No. You shouldn’t trust me, and you shouldn’t have to.” He has said in interviews before that he’s trying to set up the company where he doesn’t have quite as much power. He doesn’t have total control in that way. He has definitely hinted that he thinks a lot of the decisions governing AI should be democratic. I think it’s just again, this question of do we trust those statements or do we trust more what he’s doing, which is consolidating power and leading a for-profit company, what used to be a nonprofit.

Michael Calore: Yeah. We should underscore that, that he’s always encouraging healthy debate. He encourages open dialog about what the limits of AI should be, but that still doesn’t seem to be satisfying the people who are skeptical of it.

Lauren Goode: Yeah. I think it’s also important to make the distinction between people being skeptical of it and people who are fearful of it, too. There are people who are skeptical about the tech itself. There are people who are skeptical that Sam Altman may be the figurehead that we need here. Then there’s fear of the tech from some people who actually do believe in its potential to revolutionize the world, and the fear is that that may not happen necessarily in a good way. Maybe someone will use AI for bio-terrorism. Maybe someone uses AI to launch nukes. Maybe the AI itself becomes so all-knowing and powerful that it somehow becomes violent against humans. Those are some real fears that research scientists and policymakers have. The questions about Sam’s trustworthiness aren’t only from, let’s call it a capital perspective. What’s going on in terms of is OpenAI a nonprofit or a for-profit, or can we trust this man with billions of dollars in funding? It’s literally can we trust him in a sense with our lives?

Zoë Schiffer: Right. It’s the degree to which we should be worried about Sam Altman is the degree to which you personally believe that artificial general intelligence is a real concern or that AI could significantly change the world in a way that could be potentially quite catastrophic.

Lauren Goode: Altman was quoted in a New York magazine profile about him as saying AI is not a clean story of only benefits. Stuff is going to be lost here as it develops. It’s super relatable and natural for people to have loss aversion. They don’t want to hear stories in which they’re the casualty.

Michael Calore: We have a self-appointed figurehead whether we like it or not, and we have to make decisions about whether or not this is somebody we can trust. But before we get there, we should talk about how Sam got to where he is today. What do we know about Sam Altman the man, before he was Sam Altman the tech founder?

Lauren Goode: Well, he’s the oldest of four children. He comes from this Midwestern Jewish family. He grew up in St. Louis. His family, by all accounts, he had a pretty good childhood, I believe. They hung out together. They played games. I think his brother has said that Sam always needed to win those games. In high school, he comes out as gay, which was pretty unusual at the time. He said that his high school was actually quite intolerant toward gay people. At one point he gets up on stage at his school, according to this New York Magazine profile, and gives a speech about the importance of having an open society. My takeaway from that was that he was willing from an early age to be different from other people and to reject the dominant ideology. There was also this really funny section in the profile where they said that he was a boy genius, and at age 3 was fixing the family’s VCR. As the mom of a 3-year-old, I was like, what the fuck?

Michael Calore: I was resetting the clock on the family VCR by 11, and I just want to say that I’m also a boy genius.

Lauren Goode: It’s funny with profiles of some of these founders, because I do feel like there’s this hagiographic tendency to be like, “Of course, they were a child genius, too.” No one is ever just mid in childhood and then ends up being a billionaire. There’s always something special.

Michael Calore: Right. Well, there was something special about Sam, that’s for sure.

Lauren Goode: Yeah. He starts at Stanford in 2003. This is the time period when other young, ambitious people are starting companies like Facebook and LinkedIn. I think if you were smart and ambitious like Sam clearly was, you weren’t going to go into the traditional law, doctor type fields. You were going to try and start a company, which is what he does.

Zoë Schiffer: Yeah. He starts this company called Loopt. He’s a sophomore at Stanford. He teams up with his then boyfriend, and they create what’s basically like an early Foursquare. This is when Sam gets involved with Y Combinator for the first time. He and his cofounder receive a $6,000 investment. They’re accepted into a summer founders program at Y Combinator, and this is where they get to spend a few months incubating their app with mentorship. They’re with a bunch of other nerds. There’s this great detail about how Sam worked so much during that time period that he got scurvy.

Lauren Goode: Oh, my God. That also feels like mythologizing.

Zoë Schiffer: It really does. Fast-forward a few years, it’s 2012. Loopt has raised about $30 million in venture capital, and the company announces that it’s going to be acquired by another company for around $43 million. This sounds like a lot of money to people who don’t start apps and sell their companies, but by Silicon Valley standards, this is not really deemed a success. Sam is comfortable. He’s able to travel the world a bit, find himself, think about what he wants to do next, but there’s still a lot of ambition there, and we’re still yet to see the real Sam Altman.

Lauren Goode: Does money seem like it’s a motivating force for him, or what is he after during this time period?

Zoë Schiffer: Yeah. That’s a really good question, and this I think is maybe emblematic of his personality, because he doesn’t just stop there. He’s still ruminating on a lot of things. He’s still thinking a lot about technology. Then in 2014, he was tapped by Paul Graham to be president of Y Combinator, so he’s immersed himself in a bunch of other technologists with all these new ideas. Around 2015, as he’s thinking about all this stuff, that’s really when we have the seeds of OpenAI.

Michael Calore: Let’s talk about OpenAI. Who are the cofounders? What do the early days of the company look like, and what is its mission when it takes off?

Lauren Goode: Originally, OpenAI is founded as this group of researchers who are coming together to explore artificial general intelligence. A group of cofounders, including Sam and Elon Musk, set up OpenAI as a nonprofit. They don’t really have a conception of having a commercial component to it or a consumer-facing app involved. It really does feel like a research org at this point, but Elon Musk in classic Elon Musk form wants to wrest more control away from the other cofounders. He tries to take it over repeatedly and floats the idea that Tesla could acquire OpenAI, and the rest of his cofounders allegedly reject this idea, and so ultimately Musk walks away and Sam Altman gets control of the company.

Michael Calore: I think it’s important to also make the distinction that there were a lot of companies working on artificial intelligence at this time, about eight or nine years ago, and OpenAI saw themselves as the good guys in the group.

Lauren Goode: That’s the open.

Michael Calore: Yeah. Basically, if you’re building artificial intelligence tools, they may be harnessed by military. They may be harnessed by bad actors. They may get to the point where they become dangerous, like some of the dangers that Lauren was talking about earlier, and they saw themselves as the people who are going to do it the right way. People are going to do it so that AI can benefit society and not harm society. They wanted to make their tools available for free to as many people as possible, and they really wanted to make sure that this wasn’t just a closed box that ended up just making a few people a bunch of money while everybody else sat on the outside.

Lauren Goode: That was how they conceived of being good. It was the democratization factor more than the trust and safety factor. Is that fair? Were they sitting around being like, “We’re really going to study the harms, all of the potential misuses?” Or was it more like, “We’re going to put this in people’s hands and see what they do with it?”

Zoë Schiffer: That’s a good question. I think they saw themselves as “value aligned.”

Michael Calore: Yes. That’s a term that they all use.

Zoë Schiffer: Yeah. In 2012, there was something called AlexNet that was this convolutional neural network. It was able to identify and classify images in a way that hadn’t been done before by AI. It blew people’s minds. You can hear Nvidia CEO Jensen Huang talk about how that convinced him to change the company’s direction towards AI, so a big moment. Then in 2017, there were some Google researchers that worked on this paper. It’s known as the attention paper now that basically defined the modern day transformers that make up the T in ChatGPT. You’re right, Mike, there were these groups and companies that were hopping on the train, and OpenAI from the beginning was like, “We definitely want to be on that train, but we think that we’re more value-aligned than the others.”

Michael Calore: Something that they realized very quickly is that if you’re building artificial intelligence models, you need massive amounts of computing power, and they did not have the money to buy that power. That created a shift.

Lauren Goode: They turned to daddy Microsoft.

Zoë Schiffer: Well, they try and raise as a nonprofit, and Sam says it’s just not successful, and so they’re forced to take on a different model, and the nonprofit starts to morph into this other thing that has a for-profit subsidiary. OpenAI starts to look weird and Frankenstein even in its early years.

Lauren Goode: Yeah. By the early 2020s, Sam had left Y Combinator. OpenAI was his full-time thing. They had created this for-profit arm, and then that enabled them to go to Microsoft, Daddy Warbucks, and raise, I think, a billion dollars to start.

Michael Calore: Then what is Sam’s own journey during this time? Is he investing? Is he just running this company?

Lauren Goode: He’s meditating.

Michael Calore: He’s very into meditation.

Lauren Goode: Just meditating.

Zoë Schiffer: In classic founder, venture capitalist kind of fashion, he’s investing in a bunch of different companies. He’s poured $375 million into Helion Energy, which is a speculative nuclear fusion company. He’s put $180 million into Retro Biosciences, which is looking at longevity and how people can live longer. He’s raised $115 million for WorldCoin, which Lauren, you went to one of their events recently, right?

Lauren Goode: Yeah. WorldCoin is a pretty fascinating company, and I think in some ways is also emblematic of Sam’s approach, ambition, personality, because what they’re creating is, well, there’s an app, but there’s also an orb. A physical orb like a ball that you’re supposed to gaze into, and it captures your irises, and then it transforms that into an identity token that puts it on the blockchain. The idea that Sam has expressed is that, “Look, in the not-so-distant future, there’s going to be so much fakeness out there in the world because of AI, and people are going to be really easily able to spoof your identity thanks to the AI I’m building. Therefore, I have a solution for you.” His solution is this WorldCoin, now just called World product. Here’s the man who is accelerating the development of AI and saying, “Here are the potential perils, but also I have the solution, folks.”

Zoë Schiffer: I’m pro anything that lets me not remember my many, many passwords, so you can scan my iris, Sam.

Lauren Goode: What else was he investing in?

Zoë Schiffer: During this period, he’s also getting rich. He’s buying fancy cars. He’s racing those fancy cars. He gets married. He says he wants to have kids soon. He purchases a $27 million house in San Francisco, and then he’s pouring a ton of energy into OpenAI and specifically launching ChatGPT, which is going to be the commercial face of what was previously a nonprofit.

Lauren Goode: Yeah. That’s really a watershed moment. It’s the end of 2022, and all of a sudden people have a user interface. It’s not just some LLM that people don’t fully understand or comprehend that’s working behind the scenes. It’s something that they can go to on their laptop or on their phone and type in and then have this search experience that feels very conversational and different from the search experience that we’ve known and understood for the past 20 years. Sam is the face of this. The product events that OpenAI starts hosting intermittently start to feel a little bit like Apple events in the way that people, us, tech journalists are covering it. Then the next year in 2023, before the blip, Sam goes on this world tour. He’s meeting with prime ministers and heads of state, and what he’s doing is he’s calling for a very specific kind of regulatory agency for AI. He has decided that if AI continues to grow in its influence and power, that it’s going to be regulated at some point potentially, and that he wants to be a part of that conversation. Not just a part of that conversation. He wants to have control over what that framework looks like.

Zoë Schiffer: Right. You pointed to this earlier, but there is this ongoing debate about artificial general intelligence and the idea that AI will become sentient at some point and maybe even escape the box and turn against us humans. Sam recently said that this isn’t actually his top concern, which I think is a little concerning. But he said something smart, I thought, which was that there’s a lot of harm that can be done without reaching AGI, like misinformation and political misuses of AI. They don’t really need artificial intelligence to be that intelligent in order to be very, very destructive.

Michael Calore: Yeah. Job loss. We should also mention AI’s impact on labor, because a lot of companies are eager to cut costs and they buy into an AI system that automates some of the work they used to rely on humans to do. Those humans lose their jobs. The company realizes that maybe the AI tool that they’re relying on is not as good as the humans. Maybe it actually does the job better.

Zoë Schiffer: We’re already seeing that somewhat. I feel like Duolingo laid off a bunch of their translators and is investing a lot of money in AI right now.

Lauren Goode: That’s a real bummer, because I thought my next career would be a Duolingo translator.

Zoë Schiffer: It’s a bummer, because I’m looking at the Duolingo owl right behind your head, so we know you’re in bed with Duolingo.

Lauren Goode: There really is one in the studio here. Duolingo sent me some owl heads. I just love Duolingo.

Michael Calore: You know who loves Duolingo?

Lauren Goode: Who? Who? That was an owl joke, folks. This podcast is over. Oh, I love it so much. Back to Sam Altman. You’re correct, Zoe. One of the most interesting parts about Sam going around the world talking to politicians and heads of state about how to regulate AI is that there’s this assumption that there is just one overarching solution for how to regulate it as opposed to seeing what comes up and the different needs we have in different spaces for how the technology is actually going to work.

Zoë Schiffer: That’s one criticism he’s gotten from people like Marc Andreessen who say he’s going for regulatory capture. They’re very suspect of his efforts to be part of the regulatory push of AI, because obviously he’s invested in seeing that regulation look a certain way. One other thing that he says, which I think is quite interesting and a bit self-serving, is that some of the things that look like they’re separate, or as tech people like to say, orthogonal to AI safety are actually really closely related to AI safety. Look at human reinforcement of models, the idea that you get two different responses to your prompt and you as a human vote on which one was more helpful. That can make the model a lot smarter, a lot faster, but it also can make the model more aligned to our societal values in theory.

Michael Calore: That pretty much brings us back to the scene that we outlined at the beginning, the blip when Sam was fired, and then four or five days later got his job back. Well, now that Sam Altman has been back at the helm of OpenAI for about a year, it has been an eventful year, and part of that is because all eyes are on the company. We’re paying extra close attention to everything that they’re doing, but it’s also due to the fact that this company is making a massively impactful piece of technology that touches so many different things in our world. Let’s very quickly go through what the last year has been like with Sam back in the driver’s seat.

Zoë Schiffer: Yeah. It’s not even, I would say just that all eyes are on the company and that they’re building these massively impactful products. It’s also that OpenAI is a messy company. Its executives are constantly leaving. They’re constantly starting other companies that are even more overtly safety conscious supposedly than OpenAI.

Lauren Goode: They have these really funny names, the people who leave. They’re like, “I’m starting a new startup. It’s brand new. It’s called the anti-OpenAI safety alignment measures OpenAI never took company.”

Zoë Schiffer: The super, super, super, super safe non-OpenAI safety company. Just to start with some of the copyright claims, one of the major points of contention with what Sam is building and what AI companies are building is that in order to train a large language model, you need a shit ton of data. A lot of these companies are allegedly scraping that data from the open web. They’re taking artists’ work without their permission. They’re scraping YouTube in what might be an illegal fashion. They’re using all of that to train their models, and they’re often not crediting the sources. When GPT-4o comes out, it has a voice that sounds remarkably like Scarlett Johansson’s voice in the movie Her. Scarlett Johansson gets really upset about this, and she almost sues the company. She says that Sam Altman came to her directly and asked her to participate in this project to record her voice and be the voice of Sky, which is the voice of GPT-4o, and she said no. She thought about it and then she didn’t feel comfortable doing it. She felt like the company had gone ahead and used her voice without permission. It comes out that that wasn’t actually the case. It seems like they just hired an actor that sounded remarkably like her, but again, messy. Messy, messy, messy.

Michael Calore: All right. Let’s take another break and we’ll come right back. Welcome back. On one side, we have all the mess. We have the FTC looking into violations of consumer protection laws. We have lawsuits and deals being signed between media companies and other people who publish copyrighted work.

Lauren Goode: Is this where we do the disclaimer?

Michael Calore: Oh, yes. Including Condé Nast.

Lauren Goode: Including Condé Nast, our parent company.

Michael Calore: Our parent company has signed a deal with OpenAI, a licensing deal so that our published work can be used to train its models. There are safety and culture concerns. There’s the fact that they’re being fast and loose with celebrity impressions. Then on the other side, we have a company that is making a big important technology that many in the industry are throwing tons of money and tons of deals behind in order to prop it up and make sure that it accelerates as quickly as possible. We’re in this position as users where we have to ask ourselves, do we trust this company? Do we trust Sam Altman to have our best future in mind when they’re rolling this stuff out into the world?

Lauren Goode: Knowing full well that this very podcast is going to be used to train some future voice bot in OpenAI.

Michael Calore: It’ll sound like all three of us mixed together.

Lauren Goode: Sorry about the vocal fry.

Zoë Schiffer: I would listen to that.

Lauren Goode: Right now, there’s this equation that is being made as we use not just ChatGPT, not just going to ChatGPT and typing in a query and training the model that way. Just our data being on the internet is being used to train these models in many instances without anyone’s consent, and it just feels like this moment. It requires so much intellectual or existential gymnastics of living a life online right now to think about whether what you’re getting from this tech is actually as great as what you have to put into it, what you are putting into it. I personally have not gotten a lot of benefit from using apps like ChatGPT or Gemini or others. That could change. It could absolutely change. There are a lot of instances of AI that I use in my day-to-day life right now, embedded in my email application and my phone and all this stuff that actually are really great, have absolutely benefited me. These new models of generative AI tools, it’s still a giant TBD, and yet I know that I’ve already given more to these machines. Is Sam Altman the singular person who I look to and I’m like, “I trust this guy with that, with figuring out that equation for me?” No.

Michael Calore: No. What about you, Zoe?

Zoë Schiffer: I would say no, I don’t think he’s proven himself to be particularly trustworthy. If the people who work closely with him are leaving and starting their own things that they say will be more trustworthy, then I think that speaks to something we should be somewhat concerned about. At the same time, I don’t know if there’s any single person that I would trust with this. It’s a lot of power and a lot of responsibility to have in one fallible human being.

Lauren Goode: Yet I definitely have met and reported on tech entrepreneurs who I think have a pretty straight moral compass, who are being very thoughtful about what they build, who have been thoughtful about what they build. I’m not saying that it’s like, “Oh, tech bro bad.” He’s definitely not the guy. He may emerge as that person, but at this moment right now, no.

Zoë Schiffer: Yeah. I will say that he does seem very thoughtful. He doesn’t seem like an Elon Musk in the way that he’s just making impulsive decisions. He seems to really think through things and take the power and responsibility that he has pretty seriously.

Lauren Goode: He did manage to raise $6.6 billion from investors just a month or so ago, so there are clearly a lot of people in the industry who do have a certain level of faith in him. It doesn’t mean they have faith that he’s going to handle all this data in the best way possible, but it certainly means they have faith that he’s going to make a lot of money through ChatGPT.

Zoë Schiffer: Or they’re just really concerned about missing out.

Lauren Goode: They have a lot of FOMO. They’re looking at the subscription numbers for ChatGPT, but they’re also seeing a lot of potential for growth in the enterprise business, the way that ChatGPT will license its API or work with other businesses so that those businesses can create all these different plugins in their day-to-day applications and make their workers more productive and stuff. There’s a lot of potential there, and I think that’s what investors are looking at right now.

Michael Calore: This is normally the point in the podcast where, as the third person in the room, I provide a balanced perspective by taking a different tack than the two of you. But I’m going to throw that out the window because I also do not think that we should be trusting Sam Altman or OpenAI with open arms. Now, there are things that the company is doing that I think are very good, like the idea of a productivity tool or a suite of productivity tools that can help people do their jobs better. It can help you study. It can help you understand complex concepts. It can help you shop for things online. Those are all interesting. I’m particularly very curious to see what they do with search when their search tool becomes more mature, and we can really see whether or not it’s a challenge to this paradigm for search that has existed for almost 20 years at this point. Capital G. Google. Beyond that, I don’t think that their tools are going to have an impact on society that is going to be a net positive. I think there is going to be enough strife in the job loss and in the theft of copyrighted work and in energy use and water use that are required to run all these complex models on the cloud computing data centers. Then of course, misinformation and deep fakes, which now are pretty easy to spot and are getting harder to spot, and within a couple of years will become indistinguishable from actual footage and actual news. I feel like as human beings on the internet, we are going to bear the brunt of these bad things that are coming. To your point, Lauren, as journalists, we are going to be collateral damage in this race to see who can create the model that does our jobs for us the best, and OpenAI is the leader there. This pursuit of profit without really looking at those problems head on sounds dangerous to me. 
We talked about earlier in the episode, Sam Altman has said that he encourages open debate and he encourages us as a society to decide what the boundaries of this technology should be. But I think that we’re moving way too quickly and the debate is happening way too slowly. That feels like an avoidant stance to say, “Oh, don’t worry. We’re going to figure it out as a collective.” While at the same time you’re pressing on the accelerator pedal as hard as you can to go as fast as possible and spend as much money as possible. Those things feel out of sync.

Zoë Schiffer: Yeah. He speaks very generally when he talks about how everyone should have a voice in these decisions regarding how AI is built and how it’s governed. One thing when you mentioned job loss that it made me think of, and we’ve mentioned this on a previous podcast episode, is that Sam Altman is involved in universal basic income experiments. The idea that you give people a fixed amount of money every month, and hopefully that mitigates any job loss that’s involved in your other projects.

Lauren Goode: I think that we’re in this moment generally, technology and society, where we may be forced to let go of some of the institutions that we’ve relied on for the past few decades. Technologists are often the first people to jump on that and say, “We have a better idea. We have a better solution. We have a better idea for government. We have a better idea for how people should get paid and make money. We have a better idea for how you should be doing your work and what can make you more productive. We have all these ideas.” They’re not always bad ideas, and at some point we do have to let go. Change happens. It’s inevitable. What is it? It’s not death and taxes. It’s change and taxes. That’s the inevitable. Also, death.

Zoë Schiffer: Lauren is part of the DOGE commission and she’s coming for your institution.

Lauren Goode: Yes. But then you have to also identify the right people to effect that change. That is, I think, the question that we’re asking. We’re not asking are these bad ideas? We’re asking who is Sam Altman? Is this the person who should be steering this change, and if not him, then who?

Zoë Schiffer: But Lauren, just to push back on that, he is the person. At a certain point, we’re living in a fantasy if we three tech journalists are just sitting here talking about, should it be Sam? Should it not be? Well, he’s doing it and it doesn’t look like he’s going away anytime soon, because the board was not in a real sense able to push him out despite the fact that it legally had that right. He’s still CEO.

Lauren Goode: Yeah. He’s entrenched at this point, and the company is entrenched simply just based on how much money is invested in them. There are a lot of stakeholders who absolutely are going to make sure that this company succeeds, no doubt. But also, if we’re at the early-ish phase of generative AI in the way that other transformative technologies have had their early-ish stages, and then sometimes there are other people and companies that emerge that actually end up doing more.

Michael Calore: What we’re hoping for is corrective forces.

Lauren Goode: Maybe. We’ll see.

Zoë Schiffer: Okay. I stand corrected then. I feel like maybe it is worth having this discussion on who should lead it. We’re early days still, I feel like. I lose sight of that sometimes.

Lauren Goode: That’s okay. You may be right.

Zoë Schiffer: Because it feels like he’s the dominant player.

Michael Calore: That’s the best part about reporting on technology is that we’re always in the early days of something.

Lauren Goode: I suppose so.

Michael Calore: All right. Well, that feels like as good of a place as any to end it. We’ve solved it. We should not trust Sam Altman, but we should trust the AI industry to self-correct.

Zoë Schiffer: Yeah. I think of this quote that Sam wrote on his blog many, many years ago, and he said, “A big secret is that you can bend the world to your will a surprising percentage of the time, and most people don’t even try. They just accept the way things are.” I feel like that says a lot about him. It also makes me think, Lauren, to your point, that I’ve accepted, well, Sam Altman is just in charge, and that’s just the reality. Well, maybe the world needs to bend things a little to our will in a democratic fashion, not let him just lead the new future.

Lauren Goode: Never succumb to inevitability.

Michael Calore: Now, that really is a good place to end it. That’s our show for today. We’ll be back next week with an episode about whether or not it’s time to get off social media. Thanks for listening to Uncanny Valley. If you like what you heard today, make sure to follow our show and rate it on your podcast app of choice. If you’d like to get in touch with any of us to ask questions, leave comments, give us some show suggestions, you can write to us at uncannyvalley@WIRED.com. Today’s show is produced by Kyana Moghadam. Amar Lal at Macro Sound mixed this episode. Jordan Bell is our executive producer. Conde Nast’s head of global audio is Chris Bannon.

Source: WIRED